News

Anthropic gets lucky?

We previously covered how Anthropic was being sued for copyright infringement over how it obtained the books it used as training data: it pirated them.

The potential damages ranged from $1 billion all the way up to $750 billion, although the upper end was purely theoretical; no judge would actually hand down a penalty that harsh.

This week the actual number was made public: a $1.5 billion settlement. In the grand scheme of things, this is a relatively good outcome for Anthropic, as it's very close to the minimum they could have been made to pay, but it is still the largest copyright settlement of all time.

That works out to about $3,000 per work, which doesn't sound all that bad on its own until you realize that they pirated around 500,000 books (and other materials) that were under copyright protection. The payments will not be going to large corporations, but rather to the individual authors whose books were part of the class action lawsuit.
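As a quick sanity check on the per-work figure, here is the arithmetic using the rounded numbers from this story ($1.5 billion across roughly 500,000 works). The variable names are just for illustration, and this is the gross figure before any fees or deductions.

```python
# Rounded figures from the story above; the final class size and payouts may differ.
settlement_usd = 1_500_000_000  # total settlement amount
works_covered = 500_000         # approximate number of pirated works in the class

per_work_usd = settlement_usd / works_covered
print(f"~${per_work_usd:,.0f} per work")  # prints "~$3,000 per work"
```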

This settlement, while big, will not cripple Anthropic, especially considering that they just raised a $13 billion Series F at a $183 billion valuation. It does, however, send a message to the rest of the AI world: companies can and will be made to pay for illegally acquiring the datasets they train on.

I don’t think this will change how these companies behave, though. Because the value they get from this extra data is immense, they will likely take the operational security (OpSec) around their data gathering far more seriously rather than stop. Smaller companies will have to monitor their data sources much more closely, since they cannot absorb a payout of this size, but large competitors like Google and OpenAI can absorb one far more easily, making it a much less risky play for them.

Releases

$3/month Claude Code subscription?

Claude Code was released in May 2025 and has since gained a large user base, thanks to its clean terminal interface and top-tier performance, as the environment was custom-built for Claude.

As time has gone on, however, a variety of competitors have appeared, including GPT-5 with the Codex CLI and open source models like GLM 4.5, Qwen3 Coder, and Kimi K2, all claiming similar if not better performance than Sonnet in Claude Code.

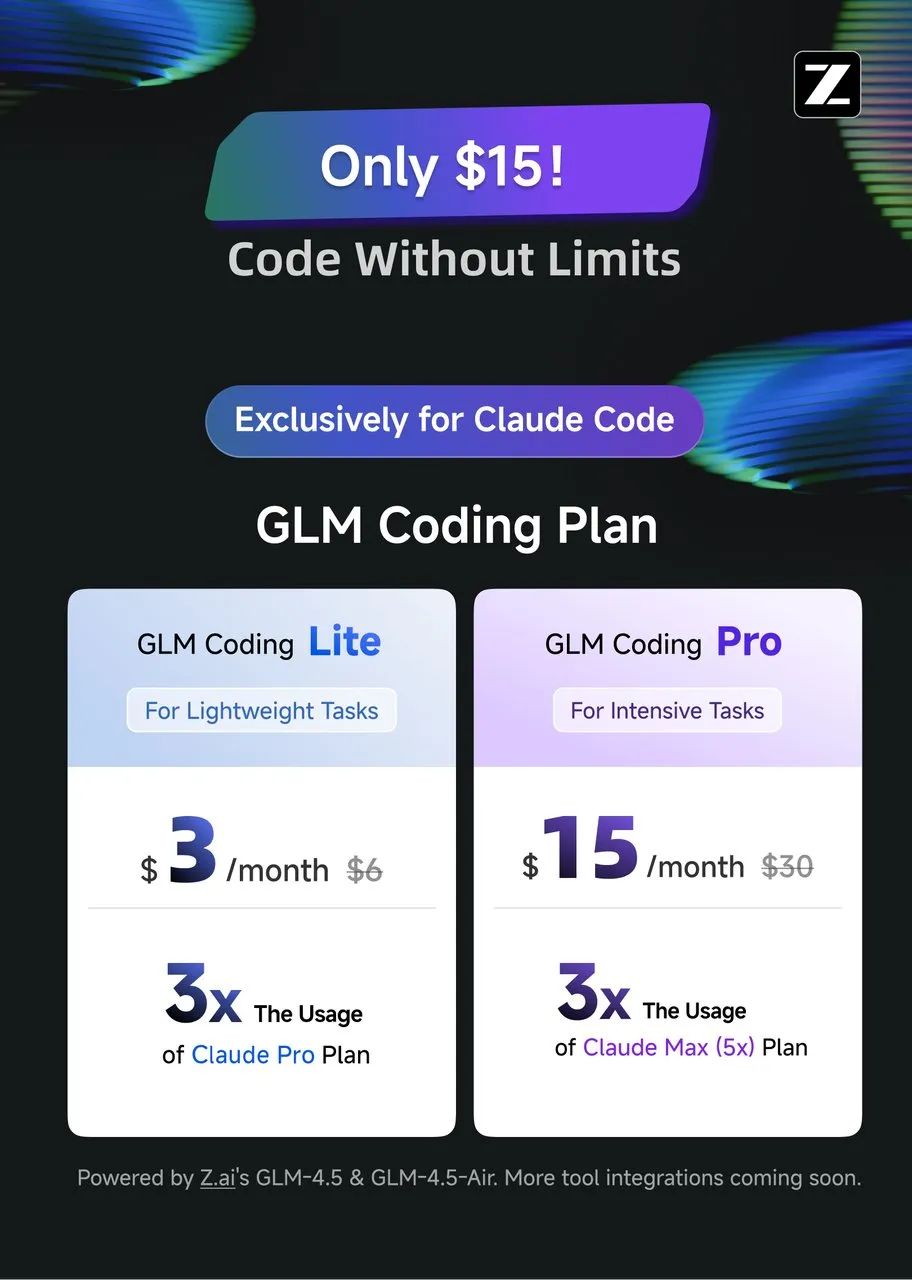

Adding to the enticing options, Z.ai, the company behind GLM 4.5, is now offering a monthly subscription plan similar to Claude Code's, but at less than a sixth of the price.

For just $3 a month, they offer 3x the usage of Anthropic's $20/month plan. The plan also comes with clearly stated usage limits of 120 messages every 5 hours, something Anthropic has not defined and is very vague about, leaving you unsure how much you will be able to use Claude in a given session.
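Taking Z.ai's figures at face value (the 3x usage multiple is their claim, and Anthropic does not publish its limits as hard numbers), the gap is bigger than the sticker price alone suggests. A small sketch of the arithmetic, measuring usage in multiples of the $20 plan's allowance:

```python
# Figures as quoted above; "units" are multiples of the $20 plan's usage allowance.
claude_price = 20.0        # USD/month, Anthropic's $20 plan
glm_price = 3.0            # USD/month, Z.ai's GLM plan
glm_usage_multiple = 3.0   # Z.ai's claim: 3x the usage of the $20 plan

monthly_ratio = claude_price / glm_price                 # ~6.7x lower monthly price
claude_cost_per_unit = claude_price / 1.0                # $20 per unit of usage
glm_cost_per_unit = glm_price / glm_usage_multiple       # $1 per unit of usage
per_unit_ratio = claude_cost_per_unit / glm_cost_per_unit

print(f"{monthly_ratio:.1f}x lower monthly price, "
      f"{per_unit_ratio:.0f}x lower cost per unit of usage")
```

Under these assumptions the subscription is about 6.7x cheaper per month and roughly 20x cheaper per unit of usage.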

Z.ai has made its endpoints directly compatible with Claude Code, so you just need to set two environment variables, as shown in their docs, and you can be off to the races coding with GLM 4.5.
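In practice this means pointing Claude Code at a different base URL and API key. Claude Code reads `ANTHROPIC_BASE_URL` and `ANTHROPIC_AUTH_TOKEN` from the environment; the Z.ai URL below and the `ZAI_API_KEY` variable name are assumptions for illustration, so check Z.ai's docs for the exact values. A minimal launcher sketch:

```python
import os
import shutil
import subprocess

# Assumption: Z.ai's Anthropic-compatible endpoint; confirm the URL in Z.ai's docs.
ZAI_BASE_URL = "https://api.z.ai/api/anthropic"


def glm_env(api_key: str) -> dict[str, str]:
    """Return a copy of the current environment that points Claude Code at Z.ai."""
    env = dict(os.environ)                    # copy, so os.environ itself is untouched
    env["ANTHROPIC_BASE_URL"] = ZAI_BASE_URL  # where Claude Code sends its API requests
    env["ANTHROPIC_AUTH_TOKEN"] = api_key     # your Z.ai key instead of an Anthropic key
    return env


if __name__ == "__main__":
    key = os.environ.get("ZAI_API_KEY")  # hypothetical variable holding your Z.ai key
    if key and shutil.which("claude"):   # only launch if a key and the CLI are present
        subprocess.run(["claude"], env=glm_env(key))
```

In a shell you would simply export the two variables before running `claude`; the Python version keeps the override scoped to a single process, so your normal Anthropic setup is left alone.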

I have found GLM 4.5 to be the best open source competitor to Claude Sonnet, and much faster too, an experience others have reported as well. GLM 4.5 also topped the Berkeley Function-Calling Leaderboard this week, further demonstrating its tool-use prowess, which is a big indicator of real-world coding performance.

If you have not had a chance to try out GLM 4.5 or Claude Code yet, this is a great opportunity to get your feet wet with the new model and coding framework!

Qwen3 Max

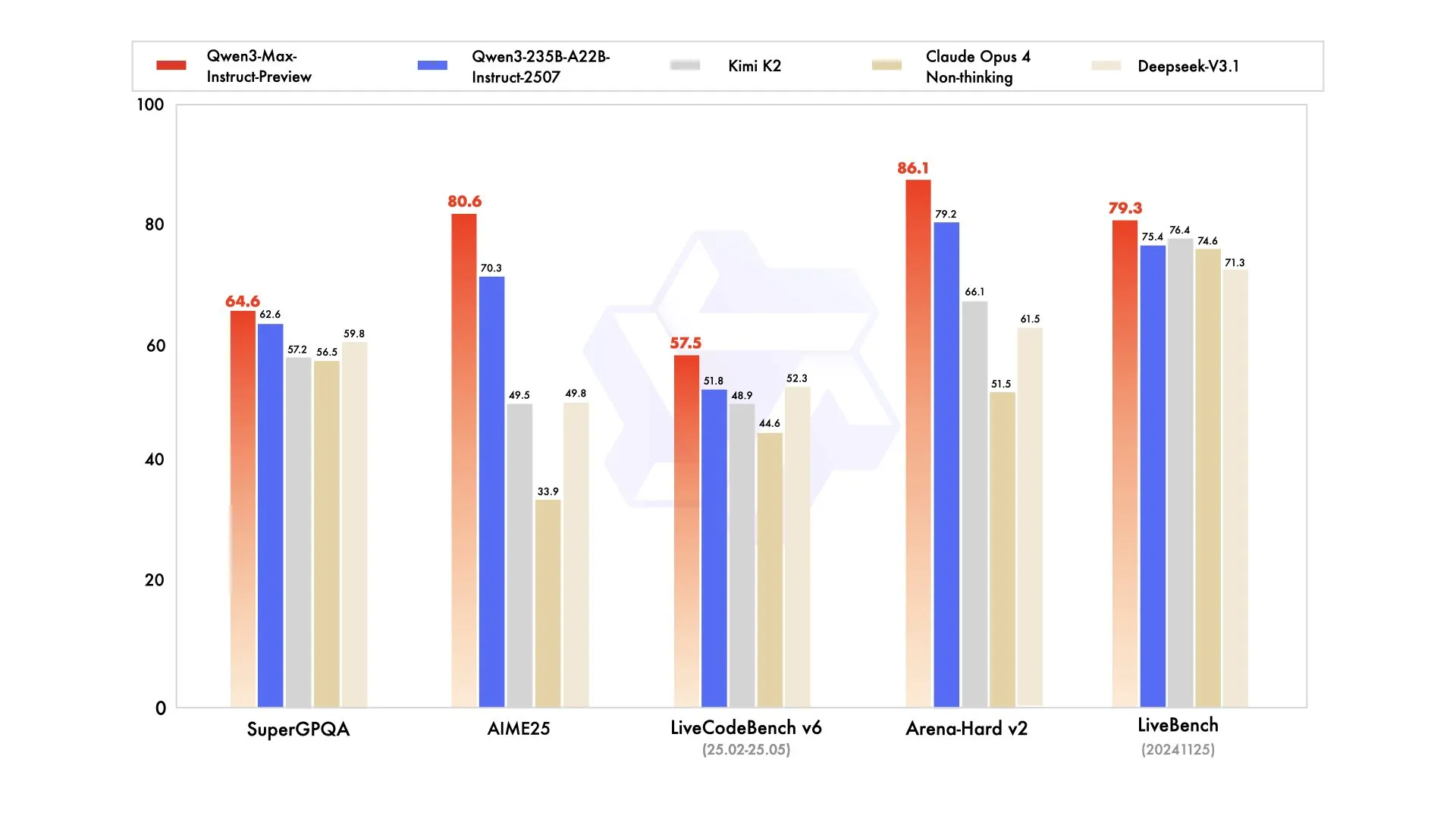

Qwen has decided to drop a doozy for their weekly release, adding the biggest model yet to their Qwen3 lineup: a 1-trillion-parameter beast called Qwen3 Max.

The model is a departure from their typical releases, as it is closed source for now. They say it will be released as open source in the future; this current iteration is just a preview.

The Qwen team says this model gives them hope for continued scaling in the future, in terms of both model size and data size, and that Qwen3 Max is much smarter than even its benchmarks reflect.

Kimi K2 Update

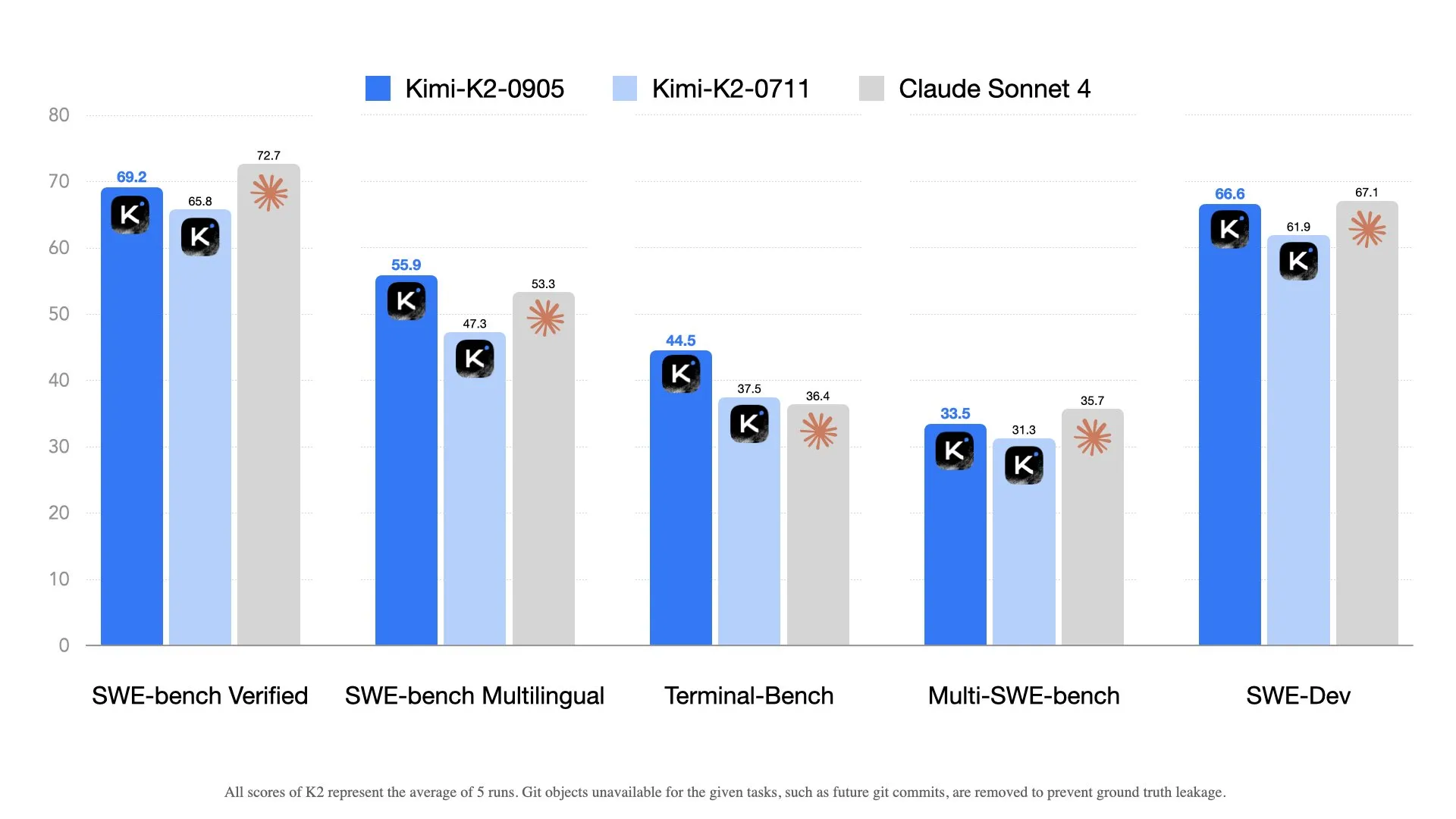

The Moonshot AI team has released an update to their already very strong Kimi K2 model. The update focuses primarily on coding ability and a longer context length, which should improve its performance in agentic coding scaffolds like Claude Code or Roo Code.

This release comes in response to recent releases from both DeepSeek and Z.ai that directly targeted agentic coding capabilities.

Research

New agentic coding benchmark

With coding being one of the largest use cases for LLMs right now, we are constantly in need of more benchmarks to measure the differences between all of these models claiming to be the best.

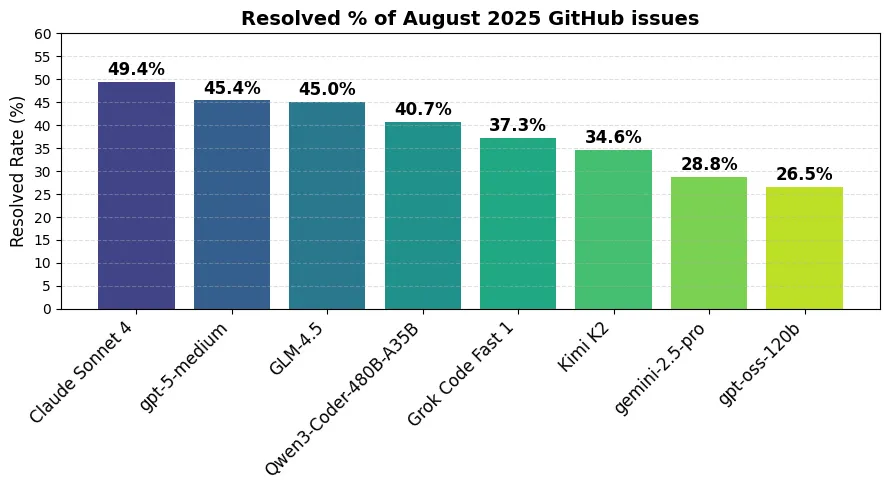

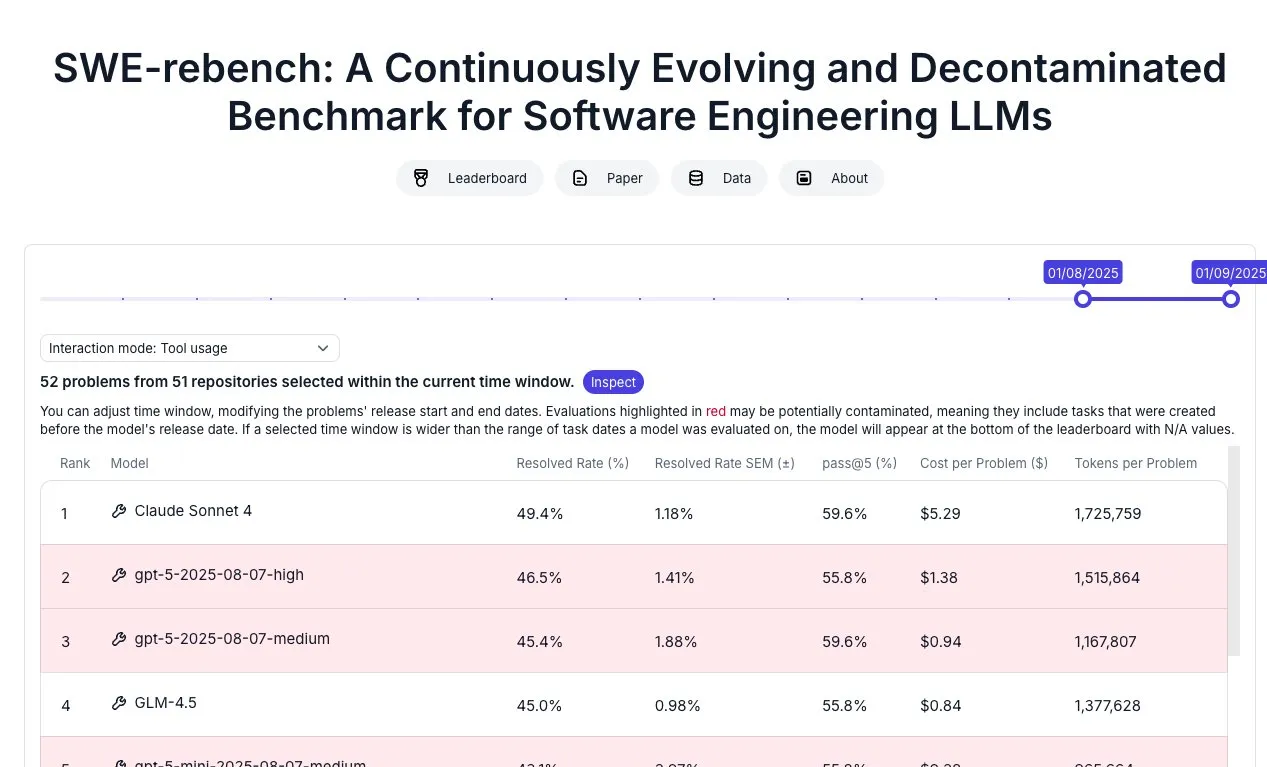

This week we got one such benchmark with the release of SWE-rebench, which compares a wide range of top closed and open source coding models.

Claude remains the best model, but is closely followed by GPT-5 and GLM 4.5. What is interesting is how cheap GPT-5 is compared to the open source models. Usually we expect closed source models like Claude and GPT-5 to be much more expensive than models like GLM 4.5 or Qwen3 Coder, but on this benchmark, GLM 4.5 costs roughly the same as GPT-5 Medium.

Also surprising was the performance of GPT-5 Mini, which came in fifth place, right behind GLM 4.5 and ahead of Qwen3 Coder.

Finish

I hope you enjoyed the news this week. If you want to get the news every week, be sure to join our mailing list below.